Project 1

In this project, I created an automated system to combine separately captured RGB image channels into a single aligned RGB image.

My implementation uses a Normalized Cross Correlation (NCC) metric to measure how well the channels of the image are aligned. The idea is that the color channels of a correctly aligned image should be strongly correlated with one another. To run more quickly, my program constructs an image pyramid with six levels of exponentially decreasing size. This means that I can compute a coarse alignment with low-resolution versions of the images, then increase the scale and refine that alignment. Because each step starts from the previous level's estimate, I can assume its starting alignment is already fairly good, so my search space of possible alignments can be much smaller than in a traditional brute-force search. The smaller images in the pyramid can also be aligned faster, so my program completes much faster than the brute-force approach.
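The coarse-to-fine search described above can be sketched roughly as follows. This is a minimal illustration and not my exact code: the function names (`ncc`, `best_shift`, `halve`, `pyramid_align`), the search radius of 4 pixels, the 2x2 block-average downscaling, and the use of `np.roll` (which wraps pixels around the edges) are all assumptions made for the example. Offsets are written as `(dx, dy)`.

```python
import numpy as np

def ncc(a, b):
    """Normalized cross-correlation of two equally sized arrays."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float((a * b).sum() / denom) if denom > 0 else 0.0

def best_shift(ref, img, center=(0, 0), radius=4):
    """Try every (dx, dy) within `radius` of `center`; keep the highest NCC."""
    best, best_score = center, -np.inf
    for dy in range(center[1] - radius, center[1] + radius + 1):
        for dx in range(center[0] - radius, center[0] + radius + 1):
            shifted = np.roll(img, (dy, dx), axis=(0, 1))  # assumption: wrap-around shift
            score = ncc(ref, shifted)
            if score > best_score:
                best, best_score = (dx, dy), score
    return best

def halve(img):
    """Downscale by 2 by averaging 2x2 blocks (an odd last row/column is trimmed)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w]
    return (img[0::2, 0::2] + img[1::2, 0::2] + img[0::2, 1::2] + img[1::2, 1::2]) / 4.0

def pyramid_align(ref, img, levels=6, radius=4):
    """Estimate the shift at the coarsest level, then refine it level by level."""
    pyramid = [(ref.astype(float), img.astype(float))]
    for _ in range(levels - 1):
        r, i = pyramid[-1]
        if min(r.shape) < 32:  # assumption: stop early once images get tiny
            break
        pyramid.append((halve(r), halve(i)))
    shift = (0, 0)
    for r, i in reversed(pyramid):
        # Doubling carries the coarser estimate up to this level's resolution.
        shift = best_shift(r, i, center=(shift[0] * 2, shift[1] * 2), radius=radius)
    return shift
```

With a radius of 4, each level tests 81 candidate shifts, yet after six levels the search can reach offsets of a couple hundred pixels at full resolution. A single-level brute-force search over the same range would need tens of thousands of full-resolution comparisons.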

Below, you can see the results of my algorithm on the provided image set.

| Name | Alignment | Image |

|---|---|---|

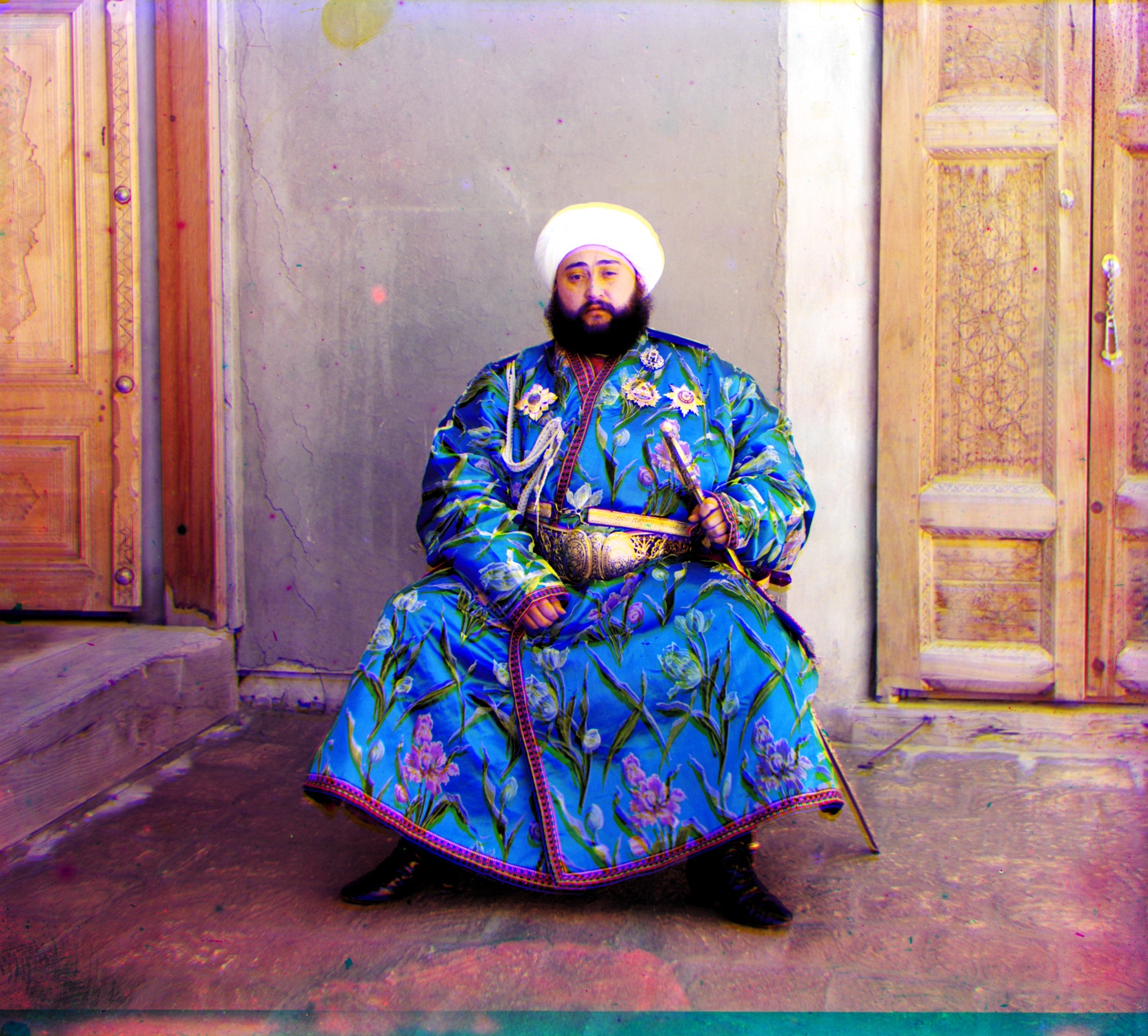

| Emir | Green (-17, -57) Blue (-44, -80) |

|

| Harvesters | Green (3, -65) Blue (-13, -116) |

|

| Icon | Green (-5, -48) Blue (-23, -81) |

|

| Lady | Green (-3, -62) Blue (-11, -104) |

|

| Self Portrait | Green (-8, -98) Blue (-37, -168) |

|

| Three Generations | Green (3, -58) Blue (-11, -104) |

|

| Train | Green (-27, -43) Blue (-32, -79) |

|

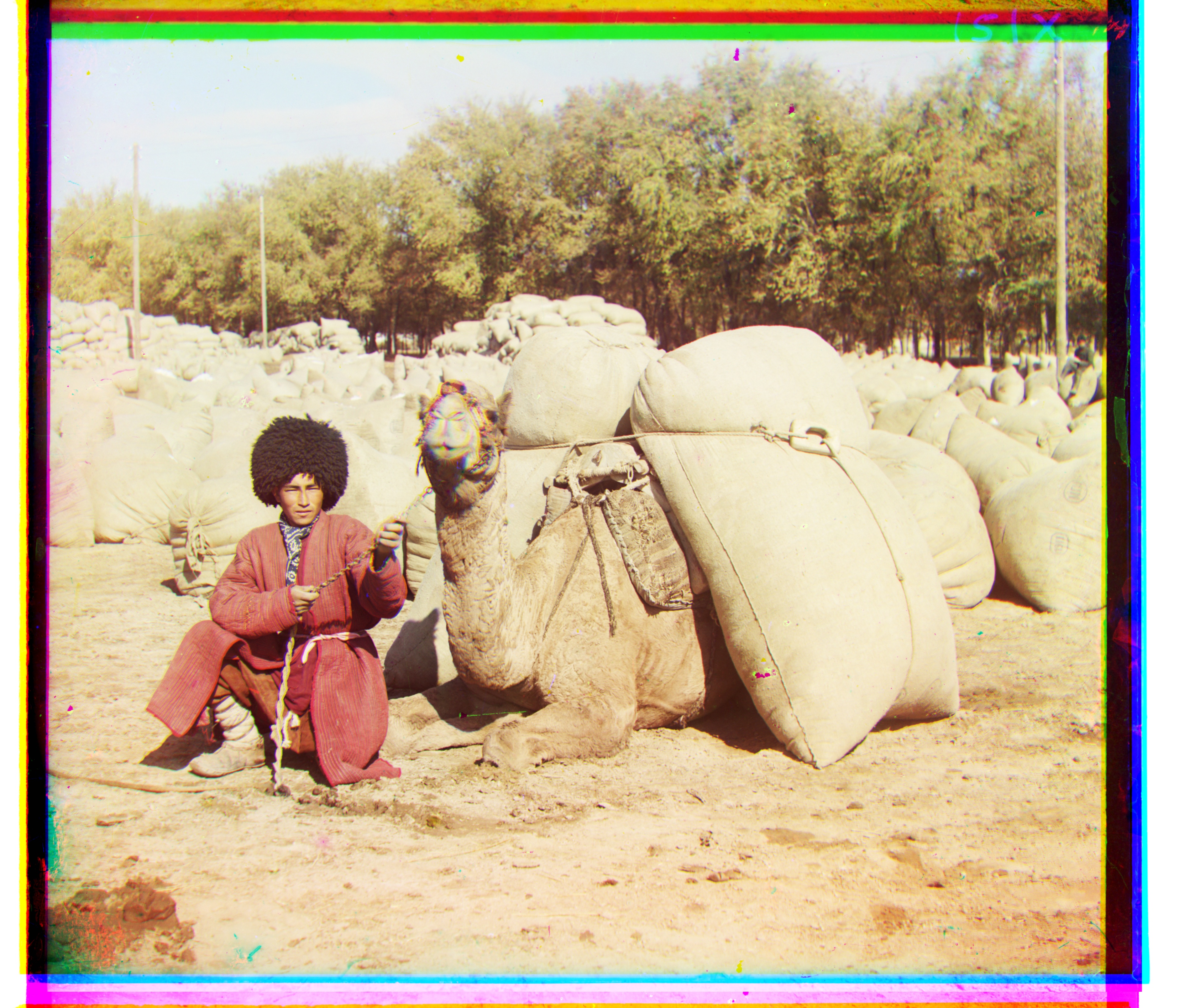

| Turkmen | Green (-7, -60) Blue (-28, -108) |

|

| Village | Green (-10, -72) Blue (-22, -129) |

|

Generally, my implementation appears to work well on the provided test images. The worst alignment is on the Emir image, where a color fringe appears above his hat and below his beard. When I originally tested with a Sum of Squared Differences (SSD) alignment metric, this image did not have this artifact. My guess is that SSD was more heavily influenced by the high contrast between the white hat and black beard. NCC, on the other hand, is more robust to brightness changes, so this section of the image probably had less influence on the chosen alignment.

Another important part of my implementation was making sure the edges of the plates were cropped out when computing alignment. When the borders were included, they had a significant negative impact on the quality of the alignment, likely because the borders are large horizontal and vertical lines, so misaligning them had a bigger influence on the metric than the features within each image. For large images, I exclude a 256-pixel border, which ensures that only the inner image pixels contribute to the alignment calculation. Because alignment only computes a pixel offset, I can then apply that offset to the original uncropped images. In the bonus section, I discuss how I further removed the image borders.
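As a rough sketch of this idea, the metric can be evaluated on the interior of each plate only, while the resulting offset is applied to the full image. The helper names (`interior`, `score_shift`) and the wrap-around shift via `np.roll` are assumptions for illustration. Only the 256-pixel border comes from my actual setup.

```python
import numpy as np

def interior(img, border):
    """Return the image with `border` pixels trimmed from every side."""
    if border == 0:
        return img
    return img[border:-border, border:-border]

def score_shift(ref, img, shift, border=256):
    """NCC between the interiors of `ref` and of `img` shifted by (dx, dy)."""
    dx, dy = shift
    shifted = np.roll(img, (dy, dx), axis=(0, 1))  # shift the full, uncropped plate
    a = interior(ref, border).astype(float)        # astype copies, so edits below are safe
    b = interior(shifted, border).astype(float)
    a -= a.mean()
    b -= b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float((a * b).sum() / denom) if denom > 0 else 0.0
```

Once the best `(dx, dy)` is known, the same `np.roll(full_channel, (dy, dx), axis=(0, 1))` call can be applied to the uncropped channel, since the offset does not depend on how much border was ignored while scoring.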

Other Images

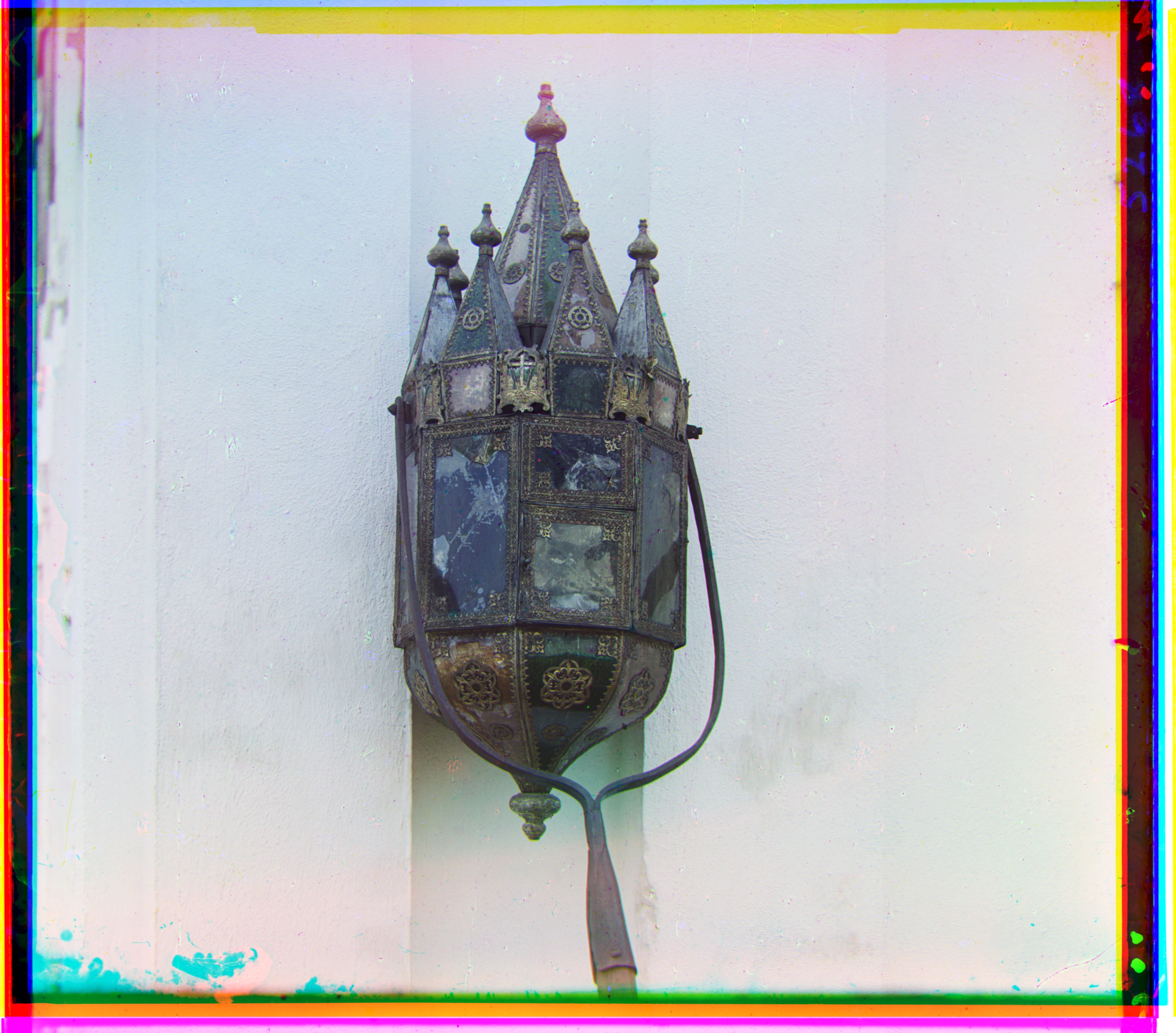

Here are a few other images I picked out from the Prokudin-Gorskii collection.

| Name | Alignment | Image |

|---|---|---|

| Cabin | Green (-20, -90) Blue (-43, -155) |

|

| Flowers | Green (18, -47) Blue (25, -87) |

|

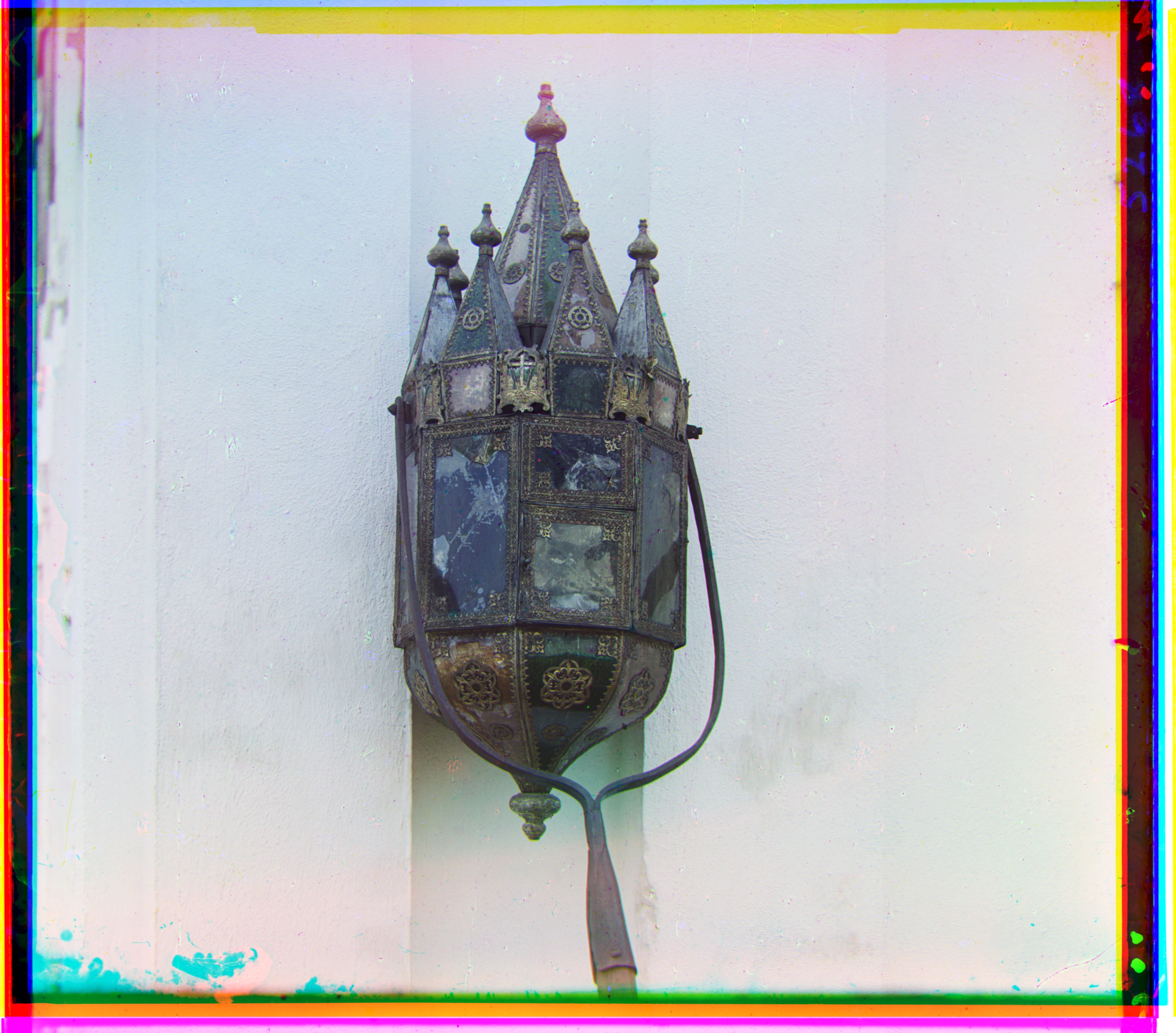

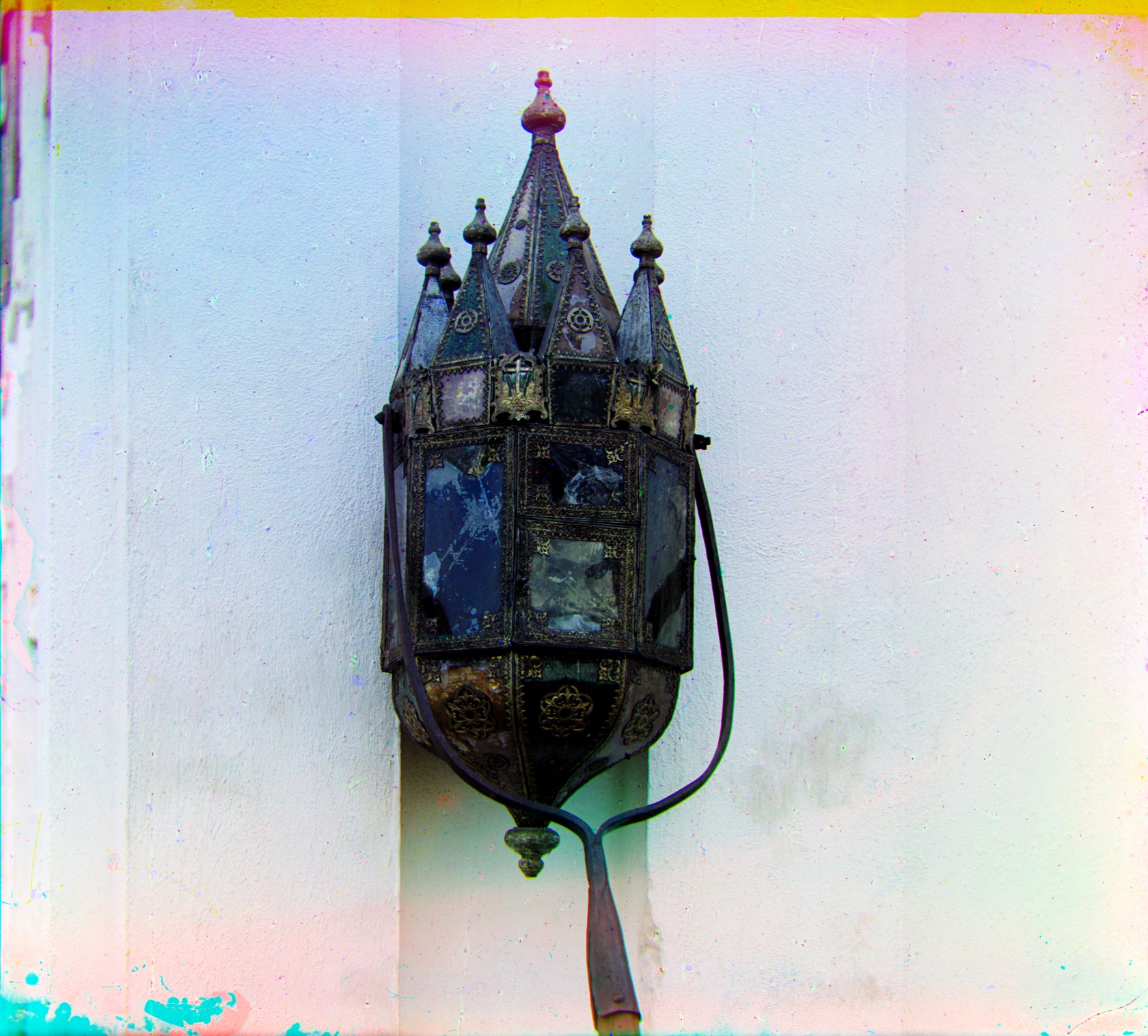

| Lantern | Green (-13, -54) Blue (-25, -51) |

|

Extra Stuff

In addition to implementing the above algorithm, I made a few attempts to improve it.

Border Cropping

The most notable improvement in my implementation is the ability to automatically crop out areas along the edges of these images where the color channels do not align. I noticed in the scans that in these regions, at least one channel is entirely black or entirely white. I thresholded each channel to find the pixels I assumed to be valid, then created a mask of the pixels where the red, green, and blue channels were all valid. To improve detection, I also eroded the mask with a 63x63 kernel. Erosion depends on the neighbors of each pixel, so it reduces the mask to only those areas that consistently pass the color threshold, which is generally the center. Finally, I identify the smallest rectangle that fully contains the mask and crop to that region.
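The steps above can be sketched as follows. This is an illustrative version under stated assumptions: the threshold values `low` and `high` are placeholders (only the 63x63 kernel size is taken from my implementation), the image is assumed to be a float array in [0, 1], pixels outside the image are treated as invalid during erosion, and the function names `erode` and `auto_crop_box` are hypothetical.

```python
import numpy as np

def erode(mask, k):
    """Binary erosion with a k x k square (k odd): keep a pixel only if its
    whole neighborhood is set. Pixels outside the image count as unset."""
    r = k // 2
    h, w = mask.shape
    padded = np.pad(mask, r, mode="constant", constant_values=False)
    # A square kernel is separable: AND along rows first, then along columns.
    rows = np.ones((h + 2 * r, w), dtype=bool)
    for d in range(k):
        rows &= padded[:, d:d + w]
    out = np.ones((h, w), dtype=bool)
    for d in range(k):
        out &= rows[d:d + h, :]
    return out

def auto_crop_box(rgb, low=0.1, high=0.9, k=63):
    """Bounding box (top, bottom, left, right) of the eroded mask of pixels
    whose three channels are all neither near-black nor near-white."""
    valid = ((rgb > low) & (rgb < high)).all(axis=2)
    mask = erode(valid, k)
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        return 0, rgb.shape[0], 0, rgb.shape[1]  # nothing survived: keep the full frame
    return int(ys.min()), int(ys.max()) + 1, int(xs.min()), int(xs.max()) + 1
```

The returned box can be used directly as `rgb[top:bottom, left:right]`. Note that the box is taken from the eroded mask, so it sits roughly half a kernel width inside the thresholded region on each side.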

While not perfect (some color fringing remains), my automatic cropping removes most of the white borders that the scans leave along the images. It does tend to fail, though, when the border has noise or when there is writing in one of the channels. A further heuristic worth trying may be to crop the image to areas where the three color channels are correlated.

Increased Contrast

To improve the quality of the output images, I increased the contrast in both luminance and saturation. Specifically, I converted the cropped RGB image to HSV space, then scaled the saturation and value channels so that the 1st and 98th percentiles became the new minimum and maximum values. Using percentiles rather than the true minimum and maximum keeps outliers in each channel from skewing the scaling. Additionally, performing this step in HSV space helps to preserve object colors, since the hue channel is unchanged. Overall, this procedure darkens black regions of the image that originally appeared grey (such as people's coats) and increases the color saturation, giving more aesthetically pleasing results.
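A minimal sketch of this percentile stretch is shown below. It assumes a float RGB image with values in [0, 1] and uses the standard-library `colorsys` module wrapped in `np.vectorize` for the color conversion, which is slow on large scans but keeps the example dependency-free. The names `stretch` and `enhance_contrast` are hypothetical, and clipping values outside the percentile range is an assumption about how the out-of-range pixels are handled.

```python
import colorsys
import numpy as np

def stretch(channel, lo_pct=1, hi_pct=98):
    """Rescale so the lo/hi percentiles map to 0 and 1, clipping values outside."""
    lo, hi = np.percentile(channel, [lo_pct, hi_pct])
    if hi <= lo:
        return channel.copy()  # flat channel: nothing to stretch
    return np.clip((channel - lo) / (hi - lo), 0.0, 1.0)

# colorsys works on scalars, so np.vectorize applies it pixel by pixel.
_to_hsv = np.vectorize(colorsys.rgb_to_hsv)
_to_rgb = np.vectorize(colorsys.hsv_to_rgb)

def enhance_contrast(rgb):
    """Stretch saturation and value in HSV space, leaving hue untouched."""
    h, s, v = _to_hsv(rgb[..., 0], rgb[..., 1], rgb[..., 2])
    r, g, b = _to_rgb(h, stretch(s), stretch(v))
    return np.stack([r, g, b], axis=-1)
```

Because only the saturation and value channels are rescaled, a washed-out grey coat moves toward black and muted colors become more vivid, while the hue of each pixel is passed through unchanged.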

Below are a few comparisons demonstrating the improvements added.