16-889: Learning for 3D Vision

Assignment-1

Aditya Ghuge aghuge

Late days used: 0

1. Sphere Tracing (30pts)

A short writeup describing your implementation.

At the start I initialize a few variables for convenience: masks, points, and ray_points, of size Nx1, Nx3, and Nx3 respectively. Every entry of masks is set to 0, every entry of points to a placeholder value (-11), and ray_points to the ray origins. I also use a threshold eps = 1e-5.

I then run a loop for max_iter iterations. In each iteration I first evaluate the signed distance function at ray_points using implicit_fn. I find the indices where the signed distance is less than the threshold; for those indices I set the mask value to 1 and copy the ray_points value into points. Finally, I advance each entry of ray_points along its ray direction by its signed-distance value, which moves the points further along the rays.
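The loop described above can be sketched in plain Python as follows. This is a minimal per-ray illustration, not the submitted implementation (which operates on batched tensors): `sphere_sdf` is a hypothetical stand-in for implicit_fn, `sphere_trace` is an illustrative name, and skipping rays that have already hit is a simplification made here.

```python
import math

def sphere_sdf(p, center=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance from p to a sphere (hypothetical stand-in for implicit_fn)."""
    return math.dist(p, center) - radius

def sphere_trace(origins, directions, sdf=sphere_sdf, max_iters=64, eps=1e-5):
    """March each ray by its signed distance; return (points, mask)."""
    n = len(origins)
    mask = [0] * n                            # 1 where the ray reached the surface
    points = [(-11.0, -11.0, -11.0)] * n      # placeholder for rays that miss
    ray_points = [tuple(o) for o in origins]  # current sample on each ray
    for _ in range(max_iters):
        for i in range(n):
            if mask[i]:
                continue                      # this ray has already converged
            d = sdf(ray_points[i])
            if d < eps:
                mask[i] = 1
                points[i] = ray_points[i]
            else:
                # step along the (unit) ray direction by the signed distance
                ray_points[i] = tuple(
                    p + d * v for p, v in zip(ray_points[i], directions[i])
                )
    return points, mask
```

For example, a ray starting at (0, 0, -3) and pointing along +z should stop on the unit sphere near z = -1, while a parallel ray offset by 5 units in y should be reported as a miss.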

2. Optimizing a Neural SDF

3. VolSDF

In your write-up, give an intuitive explanation of what the parameters alpha and beta are doing here. Also, answer the following questions:

Ans) The parameter alpha models the density of the object inside the surface. beta controls how the density (sigma) changes as we move from inside to outside the surface of the object.
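For reference, the density in the VolSDF paper is a scaled Laplace CDF of the negated signed distance d(x). That this is the exact form used in this assignment is an assumption:

```latex
\sigma(x) = \alpha \, \Psi_\beta\bigl(-d(x)\bigr),
\qquad
\Psi_\beta(s) =
\begin{cases}
\frac{1}{2}\exp\!\left(\frac{s}{\beta}\right) & s \le 0 \\
1 - \frac{1}{2}\exp\!\left(-\frac{s}{\beta}\right) & s > 0
\end{cases}
```

Under this form, sigma approaches alpha deep inside the object, equals alpha/2 on the surface, and decays to 0 outside over a length scale of roughly beta.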

- How does high beta bias your learned SDF? What about low beta?

Ans) The sharpness of the density drop is controlled by 1/beta. A high beta makes the density fall off less sharply past the surface of the object, which is not ideal since there should be a sudden drop. As a result, density is assigned to regions where no object is present, which is incorrect. A low beta gives the ideal behavior, causing a sudden drop as we move from inside to outside the surface. A high beta gives smooth gradients because the density drop is gradual, while a low beta gives a sharp drop, so the gradients are concentrated close to the surface.

- Would an SDF be easier to train with volume rendering and low beta or high beta? Why?

Ans) An SDF with volume rendering and high beta will be easier to train, as it provides smooth gradient values over a range around the surface because the density drop is not sharp.
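To make the gradient argument concrete, assume the density is sigma = alpha * Psi_beta(-d), where Psi_beta is the CDF of a zero-mean Laplace distribution with scale beta (the VolSDF form; assumed here, not confirmed by the write-up). Differentiating with respect to the signed distance d gives:

```latex
\frac{\partial \sigma}{\partial d}
= -\frac{\alpha}{2\beta}\,\exp\!\left(-\frac{|d|}{\beta}\right)
```

The magnitude peaks at alpha/(2 beta) on the surface and decays over a distance of about beta. A high beta therefore spreads a weaker but wider training signal around the surface, while a low beta gives a strong signal only within a thin shell.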

- Would you be more likely to learn an accurate surface with high beta or low beta? Why?

Ans) An SDF with volume rendering and low beta will learn a more accurate surface, as it gives a sharp density change at the surface of the object.
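A small numerical experiment can illustrate this. The sketch below renders a single ray that hits a flat surface at depth t = 1 and reports the depth expected under the volume-rendering weights. It assumes the VolSDF Laplace-CDF density; the names `volsdf_density` and `expected_depth`, the value alpha = 10, and the sampling setup are all hypothetical choices made for illustration.

```python
import math

def volsdf_density(signed_distance, alpha, beta):
    """Assumed VolSDF form: alpha times the Laplace CDF of the negated SDF."""
    s = -signed_distance
    if s <= 0:
        cdf = 0.5 * math.exp(s / beta)
    else:
        cdf = 1.0 - 0.5 * math.exp(-s / beta)
    return alpha * cdf

def expected_depth(beta, alpha=10.0, surface_t=1.0, far=2.0, n=2000):
    """Weight-averaged depth along a ray whose SDF is surface_t - t."""
    dt = far / n
    transmittance = 1.0
    weighted_t = 0.0
    total_w = 0.0
    for i in range(n):
        t = (i + 0.5) * dt                        # midpoint of the i-th segment
        sigma = volsdf_density(surface_t - t, alpha, beta)
        opacity = 1.0 - math.exp(-sigma * dt)     # opacity of this segment
        w = transmittance * opacity               # volume-rendering weight
        weighted_t += w * t
        total_w += w
        transmittance *= 1.0 - opacity
    return weighted_t / total_w
```

Under these assumptions, `expected_depth(0.5)` lands noticeably in front of the true surface because density leaks into empty space, while `expected_depth(0.05)` lands much closer to t = 1.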

4. Neural Surface Extras

4.1. Render a Large Scene with Sphere Tracing

Rendered using python -m a4.main --config-name=custom_spheres

4.2 Fewer Training Views

Panels: 100 views, 20 views, 20 views (NeRF)

Comparing VolSDF with NeRF on fewer images.

As we can see, NeRF captures more detail from fewer images than VolSDF. For example, the top red portion of the bulldozer is visible in the NeRF render but is not clearly captured by VolSDF. The NeRF images are also less blurry than the VolSDF ones, as seen from the back of the bulldozer. As we increase the number of views for VolSDF, its representation improves: the reconstruction is sharper and captures finer details, as is evident from the GIFs above, and the geometry also improves.

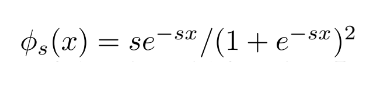

4.3 Alternate SDF to Density Conversions

I implemented the naive method. As is visible, VolSDF produces better results.

The function was used with s = 100.

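As a rough illustration, and assuming "naive" here refers to the logistic density from the NeuS paper, phi_s(x) = s * exp(-s x) / (1 + exp(-s x))^2, the conversion could be sketched as below. The function name `naive_sdf_to_density` is hypothetical, and the exact formula used in the submission is not recoverable from this write-up.

```python
import math

def naive_sdf_to_density(signed_distance, s=100.0):
    """Logistic density of the SDF value (assumed 'naive' NeuS-style conversion).

    The function is symmetric in the signed distance, so the absolute value is
    used inside exp() to avoid overflow for large negative inputs.
    """
    e = math.exp(-abs(s * signed_distance))
    return s * e / (1.0 + e) ** 2
```

With this form the density peaks at s/4 on the surface and is symmetric on both sides, so unlike the VolSDF conversion it does not stay high inside the object.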