Project Description

In this project, we implement a few different techniques that manipulate natural images. First, we invert a pre-trained generator to find a latent variable that closely reconstructs the given real image. In the second part, we take a hand-drawn sketch and generate an image that fits the sketch accordingly. Finally, we generate images based on an input image and a prompt using stable diffusion.

I. Inverting the Generator

First, you solve an optimization problem to reconstruct the image from a particular latent code. Natural images lie on a low-dimensional manifold. We choose to consider the output manifold of a trained generator as close to the natural image manifold. So, we can set up the following nonconvex optimization problem: \[ z^*=\arg\min_z\mathcal{L}(G(z), x)\] We know that the standard $L_p$ losses do not work well for image synthesis tasks. So we apply a combination of $L_p$ and perceptual (content) losses. \[ \mathcal{L}(G(z), x)=w_\text{l1}\cdot\mathcal{L}_\text{l1} + w_\text{perc}\cdot \mathcal{L}_\text{perc}\] As this is a nonconvex optimization problem where we can access gradients, we solve it with any first-order or quasi-Newton optimization method (e.g., LBFGS). As these optimizations can be both unstable and slow, we run the optimization from many random seeds and take the stable solution with the lowest loss as our final output.

Image reconstructions from optimized latents

The StyleGAN gives better results than DCGenerator even though only the latent z is provided as input. When comparing the results among different input form z/w/w+, w+ gives the best result. This is because w+ space is a much higher-dimensional space than z and w, thus it can capture more information in the image. As for the ablation study among different combinations of losses, we find that l1 & perception losses gives the best results and perception loss plays an important role. This is because perception loss is constructed with intermediate features from multiple layers in a VGG-19 network, which can capture information at various abstraction levels. The DCGenerator takes ~1 mins to optimize 1000 iterations, while StyleGAN takes ~2 mins to finish.

II. Scribble to Image

Next, we would like to constrain our image in some way while having it look realistic. We initially develop this method in general and then talk about color scribble constraints in particular. To generate an image subject to constraints, we solve a penalized nonconvex optimization problem.Written in a form that includes our trained generator \(G\), this soft-constrained optimization problem is \[ z^*=\arg\min_z \sum_i ||f_i(G(z))-v_i||_1 \] Given a user color scribble, we would like GAN to fill in the details. Say we have a hand-drawn scribble image \(s \in \mathbb{R}^d\) with a corresponding mask \(m \in {0, 1}^d\). Then for each pixel in the mask, we can add a constraint that the corresponding pixel in the generated image must be equal to the sketch, which might look like \(m_i x_i = m_i s_i\). Since our color scribble constraints are all elementwise, we can reduce the above equation under our constraints to \[ z^*=\arg\min_z \sum_i ||M*G(z) - M*S||_1 \] where \(*\) is the Hadamard product, \(M\) is the mask, and \(S\) is the sketch. Here are some results:

The first five rows are generated from provided sketches, and the last two rows are generated from sketches drawn by myself. We can see an obvious correspondence between the generated image and the sketch that it is generated from. It is important to use natural colors that may offen appear on a cat face (like pink, brown, blue for eyes, etc) and to provide reasonably structured sketches in order to get good results. When the sketch is far from the shapes of the cat faces that appear in the training set, the StyleGAN will fail to generate an image that follows the sketch.

III. Stable Diffusion

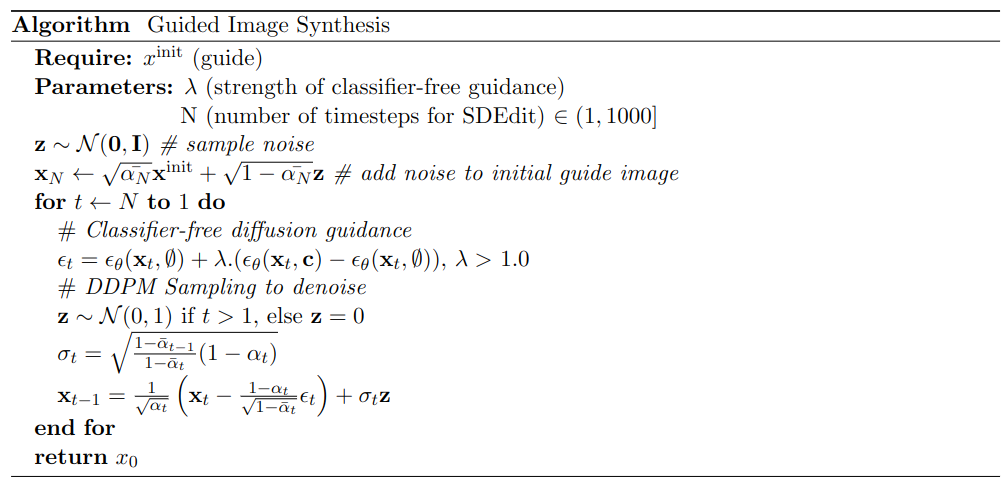

Stable Diffusion uses a diffusion process that transforms initial noise into detailed images by iteratively denoising the image, conditioned on the text embedding from text prompts. In this part, we modify this approach by incorporating an input image along with a text prompt. This approach is similar to SDEdit, in which the image input serves as a "guide", transforming the given input image into noise through the forward diffusion process instead of starting with random sampling and then iteratively denoising to generate a realistic image using a pre-trained diffusion model. In this part, we extend SDEdit with a text-to-image Diffusion model. We use the DDPM sampling method with the Classifier-free Diffusion Guidance. Here is the pseudocode of our final approach:

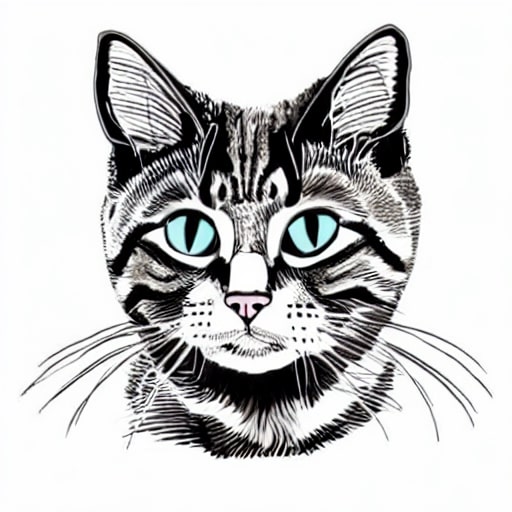

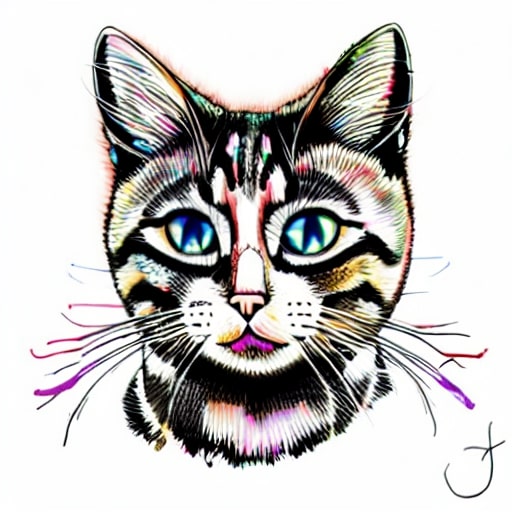

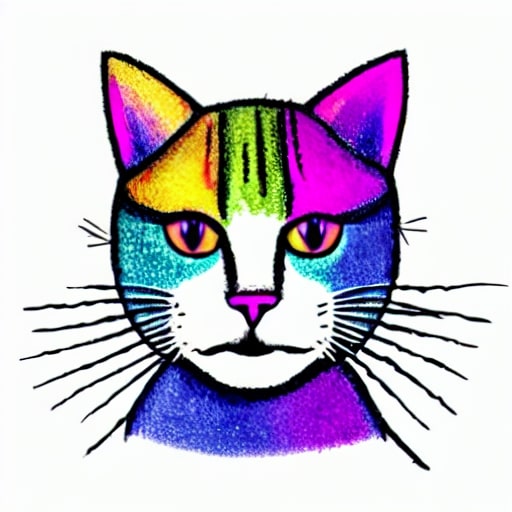

Here are some results of our Diffusin-based Guided Image Synthesis method:

Here we use the above example input image and text prompt to do an ablation study on different the amount of noise added to the input image latent. We control the amount of noise by changing the timestep that the diffusion starts. The larger the $\text{timestep}$ is, the more noise is added. We observe that as we add more noise to the input, the modification will be more significant, which means the result image will be more colorful with the prompt "A colorful cat".

Here we use the same example to do another ablation study on various classifier-free guidance weights. We observe that as $w_\text{cfg}$ increases, the generated image can better preserve the structure of a cat face.

Extra I: Latent Code Interpolation

In this part, we experiment with latent code interpolation for gan model, in order to generate a smooth transition from one generated image to another.

Extra II: Texture Constraint

In this section, we implemented the texture constraint that use the style loss mentioned in project 4 to enforce the generated image to have similar texture as the target image. In this sections, we use the style loss as the only constraint to generate our picture, since the StyleGAN model has been pretrained on cat dataset to generate valid images of grumpy cat faces. Here are the results: