Assignment #4 - Neural Style Transfer

Background

Previous artists with strong personal styles left behind masterpieces that we appreciate. Sometimes, we wish to transfer the artistic style from these existing works to our custom scenarios while ensuring that our original content remains largely intact. The sources of both style and content can be in images, videos, or other formats, as can the generated target.

Motivation

Generate a sample image with the style of style_img and the content of content_img.

Content Reconstruction

The model VGG19 is fixed and trimed as a feature encoder, and the target image is detached from the computational graph. So the whole purpose of the gradient update is to lead the input_img using the collection of loss in one forward pass to update itself through the backpropagation. The class ContentLoss is implemented as a transparent layer, through which the input is not modified, but the contentloss regarding to the embedded target in same shape is calculated and stored. It is conducted in the feature space, rather than the pixel space.

Firstly, I tried to add this ContentLoss layer after conv4. The small learning rate for optim.LBFGS is important. With the default value of 1, the updating pace is too fast and the process is easy to fall into local minima. I implement a naive stop criterion, which stops the loss if it grows larger after one step and use lr=0.01.

content source

Add content loss after a single conv layer, conv1 ~ conv5, images resized to square.

Using features in different layers to calculate content loss leads to different results. More coarse the feature map, more blurry the reconstructed image. On the contrary, finer feature maps leads to sharper reconstructed results. Among all the outcomes, conv2 shows a better preservation of color.

Texture Synthesis

The implementation of StyleLoss is quite like the ContentLoss. What makes it focus on texture is the stocastic Gram Matrix. Adding a single layer of StyleLoss does not work well. Very similar to the content reconstruction, coarse layer focus on coarse-grined features, and fine layer focus on fine-grined features.

content source

Add style loss after a single conv layer, conv1 ~ conv5, images resized to square.

Add style loss after conv1 ~ conv5, images resized to square, using different random seed to initialize.

I prefer the result using conv1 ~ conv5 together. Since the input_img is initialized by white noise, if the random seed is fixed, then two runs will result the same. Using different seeds allow the samples to be diverse.

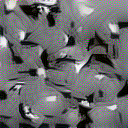

Style Transfer

I choose the hyperparameter of lr=0.01, style_weight=20, and content_weight=1, The reconstructed results from given images are shown in grid.

The source images are picasso, the scream for style and dancing, wally for content, respectively.

Using random white noise or content image with the same random seed for initialization gets different results. I think content-initialization better preserves the color of the original image.

Left: content init -- Right: random init.

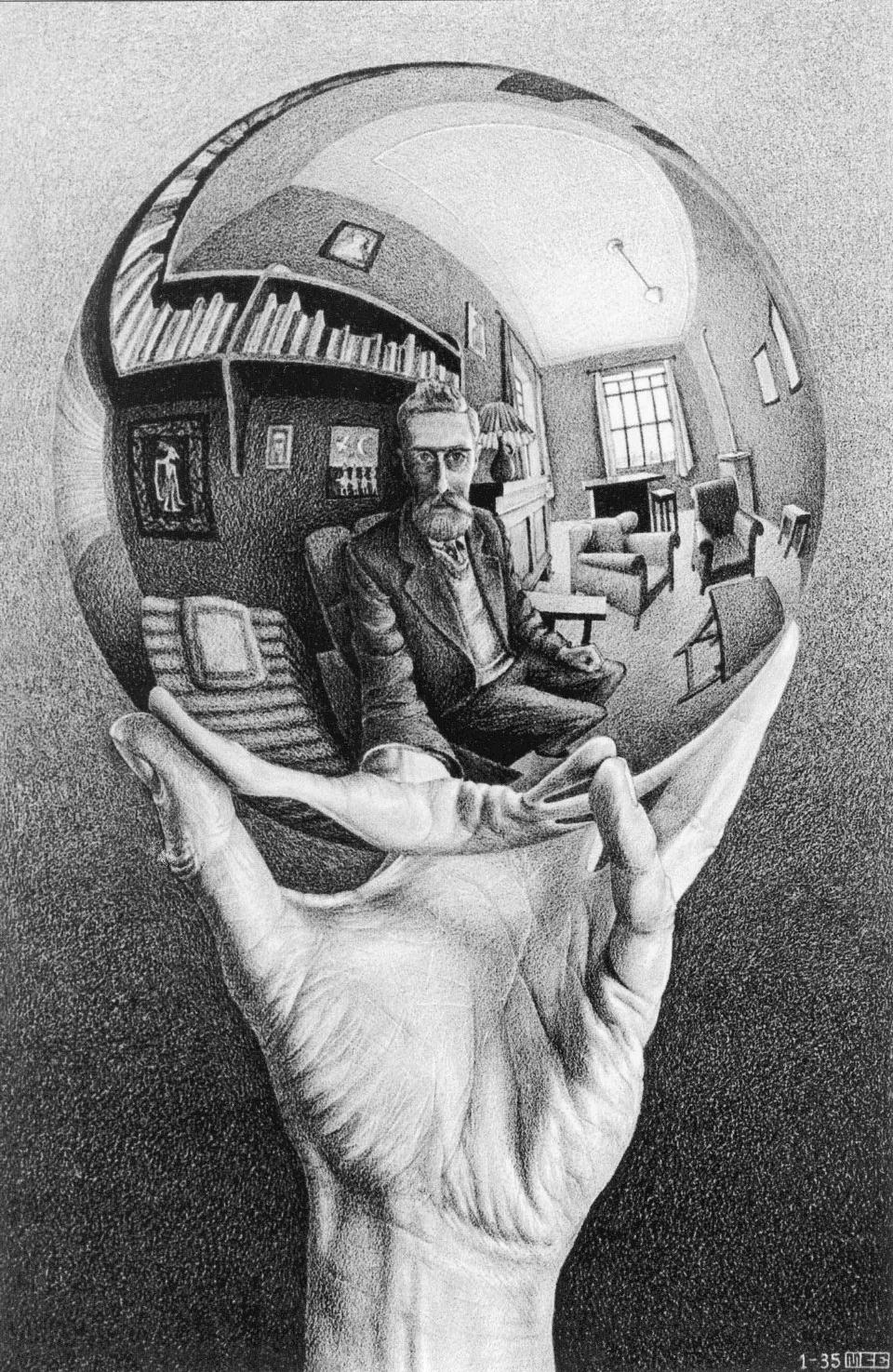

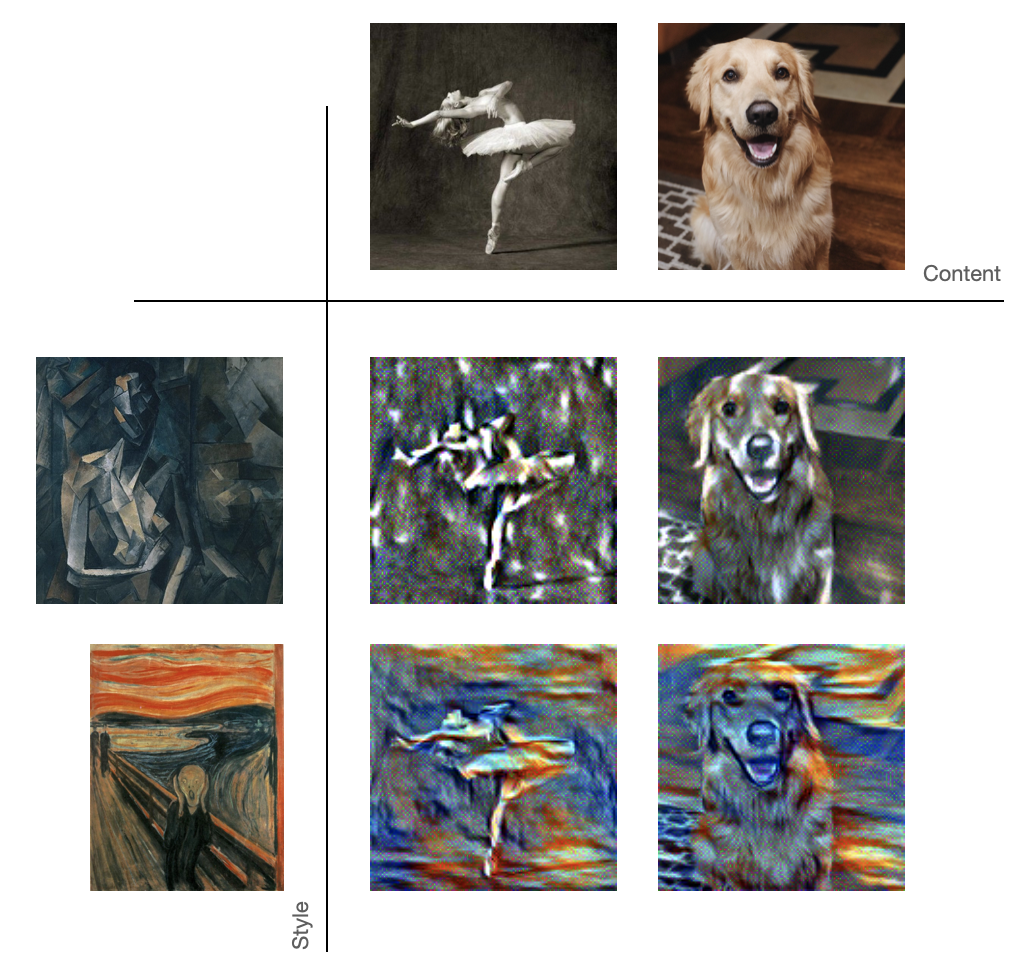

Here's the result from my own images.

Style -- Reconstructed Result -- Content.